Last updated on January 29, 2024

Nothing escapes the eye of the customer. Email marketers dread the moment they hit the send button on an email with major typos. A/B testing emails is certainly one of the more time-consuming tactics, but when it comes to improving email marketing campaigns, it helps you stay focused on your goals. You already understand your audience, which is great, but their needs and wants keep changing, and that can make an email design obsolete for future campaigns.

Running A/B tests is vital for evaluating new techniques or formats for email campaigns. Improving conversion rates can make a real difference in your marketing efforts. Modern email marketing tools come equipped with A/B testing features alongside capabilities such as scheduling a campaign or personalizing the template.

Learn in-depth how email marketing A/B testing works so that you can optimize your campaign strategies.

Pretend you are a restaurateur. Revenue has been flat for a few months, and the temptation to update the menu to see if it generates more sales is stronger than ever.

So, you create a new menu that includes some high-quality images of your signature items.

Everything else on the menu remains the same. You make 30 copies of the new menu and use them alongside the old version. Split the restaurant so that half of the patrons receive the old menu while the other half receive the new one.

After a few days, you tally the revenue and compare the sales generated from both menus. The menu with the highlighted image of the signature dish increased the average ticket per table by 20%. There’s your winner!

This is what A/B testing is all about.

Also known as split testing, it means sending two different versions to a sample of your audience. It can be done for any marketing tactic, such as sending email campaigns or creating landing pages, forms, and popups. The version that receives more engagement is considered the winner.

Email marketing forms the relationship marketing between brands and their existing and potential customers. It enables businesses to reach out to mass audiences and track each email campaign's metrics.

Because email marketing offers a personalized form of marketing, it is cost-effective. One can expect higher ROIs with email marketing than any other tactic.

So, email A/B testing is an efficient tool marketers can use to improve overall email marketing performance. Experimenting with emails allows you to compare and contrast the best and worst elements within an email.

It also allows marketers to gauge the impact their emails have on the subscribers based on reactions like engagement rate, click-through rate, open rate, and conversions.

So with an efficient A/B testing process, marketers can test different templates and designs to find what works best for their products.

Those reconsidering conducting A/B testing need to consider the following measures.

It may come as a surprise when we say at least 39% of brands do NOT test their segmented emails. Ouch!

There’s more to an email marketing strategy than sending emails to the subscriber list. Every change counts, especially when you A/B test your email marketing campaigns. The idea is to have a competitive edge over brands that are not aggressively practicing email marketing.

Small changes within the email layout make a huge difference. For instance, even a customized sender name will earn higher open rates than a generic company name. The difference shows up as a 0.53% higher open rate and a 0.23% increase in click-through rate.

These may not seem like big numbers, but even the slightest gains can have a huge impact on revenue in email marketing.

The better email version becomes part of your marketing campaigns. Therefore, A/B testing is a must.

Email marketing is a vast industry that encourages content localization according to region and time zone. As a result, you will come across several different terms that marketers use when talking about A/B testing.

A variable is the specific element of the campaign that marketers want to test. With A/B testing, these variables can be identified. The common variables include the subject line, from name, content, and send time.

Each version of a variable is known as a variation.

The identified variables drive the variations in the campaign. If you want to test these common variables, create four different combinations of email campaigns to find the best version. The combinations sent out in the emails are called test combinations.
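The relationship between variables, variations, and test combinations can be sketched in a few lines of Python. This is only an illustration: it assumes two variables with two variations each, and the subject lines and from names are made-up sample values, not settings from any particular email tool.

```python
from itertools import product

# Hypothetical variations for two of the common variables.
variations = {
    "subject_line": ["Start your free trial now", "Start a free trial that converts"],
    "from_name": ["Mailmunch", "John from Mailmunch"],
}


def build_test_combinations(variations):
    """Return every combination that uses one variation per variable."""
    names = list(variations)
    value_lists = [variations[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


test_combinations = build_test_combinations(variations)
for number, combination in enumerate(test_combinations, start=1):
    print(number, combination)
```

Two variables with two variations each produce four test combinations. Adding a third variation to either variable would raise that to six, which is why the number of combinations grows quickly.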

At this stage, the results generated from the test combinations are evaluated. Results are compared to see which variation performed better, which makes the winning combination easier to determine. This can be done manually or automatically using an email marketing tool.

So, the campaign with the higher results performs the best. It can be determined based on metrics such as click rate, open rate, and total revenue. You can manually choose the metrics you care about and create a report from the data.
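If you evaluate results manually, the comparison amounts to computing each rate and taking the maximum for the metric you chose. The sketch below assumes a hypothetical `CombinationResult` record, and the figures are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class CombinationResult:
    """Raw counts for one test combination (hypothetical structure)."""
    name: str
    delivered: int
    opens: int
    clicks: int
    revenue: float

    @property
    def open_rate(self):
        return self.opens / self.delivered

    @property
    def click_rate(self):
        return self.clicks / self.delivered


def pick_winner(results, metric="click_rate"):
    """Return the combination with the highest value for the chosen metric."""
    return max(results, key=lambda result: getattr(result, metric))


# Invented sample data for two combinations.
results = [
    CombinationResult("A", delivered=1000, opens=220, clicks=40, revenue=310.0),
    CombinationResult("B", delivered=1000, opens=205, clicks=55, revenue=290.0),
]

winner_by_clicks = pick_winner(results, "click_rate")
winner_by_opens = pick_winner(results, "open_rate")
```

In this sample, B wins on click rate while A wins on open rate, so the winner depends entirely on which metric you decided to measure. The comparison also says nothing about whether the gap is large enough to be meaningful.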

Email marketers should make a habit of testing emails by viewing a preview or sending a test email. It is important to catch any errors that may otherwise hurt performance. Therefore, we have identified rules that also act as guidelines for testing emails.

What is the main intent behind marketing emails? Every email marketer should concern themselves with the goal and the type of email they want to send. For instance, a marketer may want to send onboarding emails for a new user to boost open and click-through rates. If they succeed, the email will gain unique views.

There are a few common metrics that all marketers are inclined to observe, such as open rate, click-through rate, and conversions.

Reasons to test emails could vary from goal to user intent. For instance, a higher click-through rate on a CTA means onboarding emails will generate higher revenue. Or an improved open rate means a higher user count that could lead to more engagement.

Which do you think an A/B test would work better for: a “Happy Holidays!” email or a “Thank you” email? Think of it this way: marketers have to wait at least 12 months to send holiday emails again. Split testing, however, works perfectly for frequently sent emails like the thank-you email.

Almost 97% of brands use email to interact with first-time buyers or existing customers, for example with a thank-you email for signing up or for making a purchase.

Remember, email services and clients change frequently, which adds uncertainty to testing holiday-based or seasonal emails. Frequently sent emails are the better candidates because of the sheer number of samples they provide for testing.

What if your email marketing software doesn’t offer A/B testing? You can set up a manual test of your own by splitting the subscriber list into two groups. Download the subscriber list and work with the data in an Excel sheet. Another easy way to split the list is to arrange the names in alphabetical order in the spreadsheet.
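If you are comfortable with a little scripting, a shuffle-based split is an alternative to sorting the spreadsheet by hand. The sketch below assumes the exported list is already loaded as a plain Python list of addresses; the addresses and the `split_subscribers` helper are hypothetical. Shuffling assigns people to groups without regard to their name, domain, or signup date.

```python
import random


def split_subscribers(emails, seed=None):
    """Shuffle a copy of the list and cut it into two halves."""
    shuffled = list(emails)                # leave the original list untouched
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split repeatable
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical subscriber list.
subscribers = [f"user{number}@example.com" for number in range(10)]
group_a, group_b = split_subscribers(subscribers, seed=42)
```

Group A then receives one version and group B the other. With an odd number of subscribers, group B ends up one address larger.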

You are in good hands if you are using an upgraded email marketing platform.

Sometimes, the collected samples are large in number, presenting a challenge to marketers. This can be remedied with a smaller sample size.

Therefore, test only the most prominent variables that have a high impact on your metrics, such as CTAs and subject lines. There is also a big difference between HTML emails and plain-text emails. So make changes that are easy to implement.

We identified four common variables to A/B test in an email. However, you need to avoid overdoing the test combinations. Unless you are testing each variant across more than 50,000 emails, keep the test combinations limited to two variants.

This helps identify the key elements within the email design that work best for the marketing campaign. Choose a variable only if you believe changing it will increase performance.

Usually, engagement declines sharply 4-5 days after an email is sent. If, after 5 days, the email has made no meaningful difference, come up with a different A/B test.

To measure the outcome of the test, decide which metrics you want to track. Then create a model of the raw data you will need to calculate in a spreadsheet.

For instance, if you are trying to increase conversions, your success metric must be conversion rate. You may be tempted to focus on multiple success metrics, but it’s recommended to pick only the one that will matter once the test has ended.

Marketers should have enough volume for the data to reach statistical significance. The volume needed depends not only on the size of the groups but also on the results and the difference between them.

For instance, if you send an email to 1,000 people and click rate is the success metric, the volume you need will depend on the number of clicks and on the difference you set as a percentage of the test size.
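One common way to estimate the required volume is the standard sample-size approximation for comparing two proportions. The sketch below is a rough planning aid, not a rule from any email platform: the 5% significance level and 80% power are conventional defaults assumed here, and the function name and example rates are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate recipients needed per version to detect the given lift.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to compute a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift in click rate from 10% to 12%.
needed_per_group = sample_size_per_group(0.10, 0.12)
```

The smaller the difference you want to detect, the more recipients each version needs, which is why small lists can only reveal large effects.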

So you dispatched emails to 70,000 subscribers. 5,500 of them opened version A, and 5,600 of them opened version B. Surely version B is the winner, right?

Hold your horses.
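A quick significance check shows why. The sketch below runs a standard two-proportion z-test on the numbers above. It assumes the 70,000 subscribers were split evenly, 35,000 per version, which the example does not actually state.

```python
from math import erfc, sqrt


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value


# Assumption: an even 35,000 / 35,000 split of the 70,000 subscribers.
z, p_value = two_proportion_z_test(5_500, 35_000, 5_600, 35_000)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

Under that assumption, the p-value comes out at roughly 0.3, far above the conventional 0.05 threshold. A gap of 100 opens at this volume could easily be chance, so version B has not earned the title yet.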

You need to be careful about which version you declare the winner in an A/B test. Since you are testing only one or two variables, eliminate any variables in the audience selection process. Pick the test groups randomly. Do not apply a process that only appears random, as it could skew the results.

When A/B testing, a user typically lands on the original URL but is redirected to a new URL variation (if they are in a test group). Marketers follow this practice to preserve the original URL as the control.

A 302 (temporary) redirect is the right way to redirect these users. A 301 signals to Google that the original page has been permanently moved and replaced with the test page, which affects the page you are testing.

A 302, by contrast, tells search engines that the redirect is temporary, so the original page stays in place and its link value is not passed to the temporary page.
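In code, the difference comes down to the status code sent with the `Location` header. The sketch below is framework-agnostic and only builds the status and headers; the `build_test_redirect` helper and the example URL are hypothetical, and how you decide who is in the test group is left out.

```python
from http import HTTPStatus


def build_test_redirect(in_test_group, variation_url):
    """Return (status, headers) for a visitor who requested the original URL."""
    if in_test_group:
        # 302 Found marks the redirect as temporary, keeping the original URL as the control.
        return HTTPStatus.FOUND, {"Location": variation_url}
    # Visitors in the control group are served the original page as usual.
    return HTTPStatus.OK, {}


status, headers = build_test_redirect(True, "https://example.com/landing-b")
print(int(status), headers)
```

Using `HTTPStatus.MOVED_PERMANENTLY` (301) here instead would tell search engines the change is permanent, which is the outcome to avoid during a test.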

Documentation is usually one of the most neglected elements of testing. A diligent marketer should be fanatical about documenting tests and results, and should compare those records to get good results.

Documentation helps you build on prior lessons, avoid repeating past testing mistakes, and educate your team and successors more effectively. Even if you are not going to publish the results, writing them up as a short blog post is a great way to document them so that you don’t forget them.
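A test log does not need to be elaborate. The sketch below writes one row per test in CSV format, which opens directly in a spreadsheet. The `ABTestRecord` fields are one possible layout rather than a standard, and the sample entry is invented.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ABTestRecord:
    """One documented test (hypothetical field layout)."""
    date: str
    variable: str
    variation_a: str
    variation_b: str
    metric: str
    winner: str
    notes: str = ""


def append_record(file_obj, record, write_header=False):
    """Append one test record to an open CSV file or file-like object."""
    columns = [field.name for field in fields(ABTestRecord)]
    writer = csv.DictWriter(file_obj, fieldnames=columns)
    if write_header:
        writer.writeheader()
    writer.writerow(asdict(record))


# An in-memory buffer stands in for a real file such as open("tests.csv", "a", newline="").
log = io.StringIO()
append_record(
    log,
    ABTestRecord("2024-01-29", "subject_line", "Start your free trial now",
                 "Start a free trial that converts", "click_rate", "B"),
    write_header=True,
)
print(log.getvalue())
```

Keeping every test in one file makes it easy to see which variables you have already tried and which variations tend to win.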

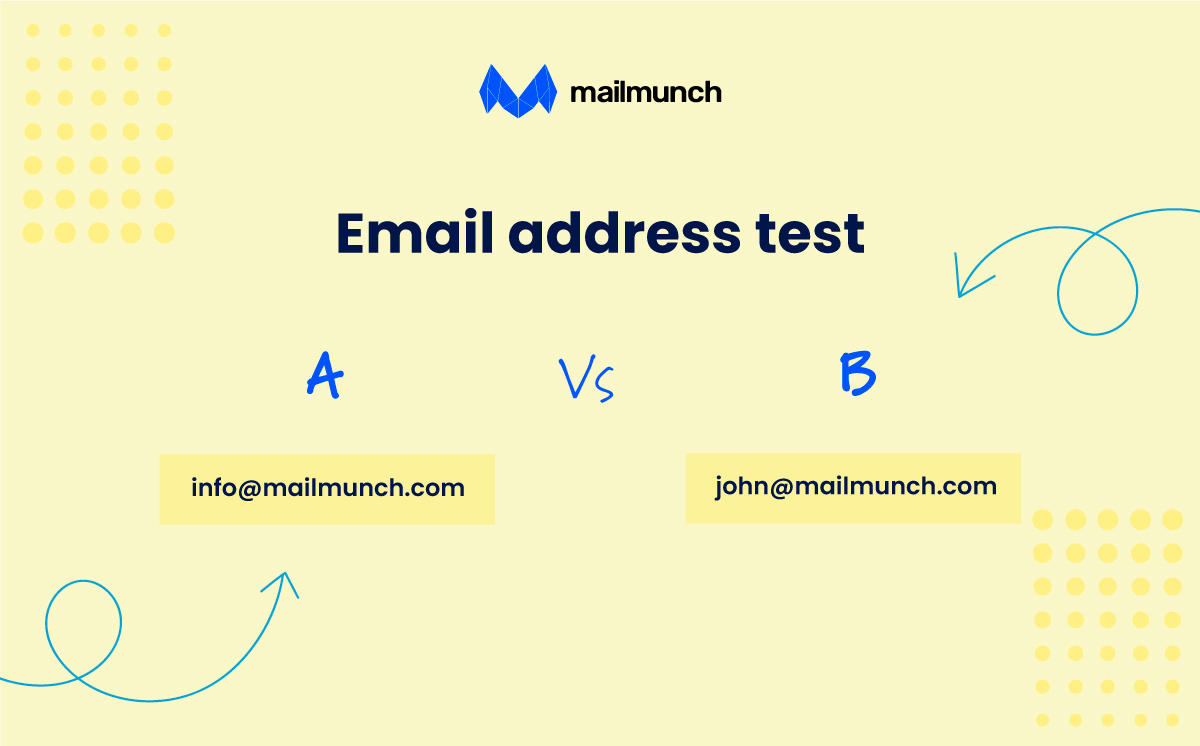

So, did you see a higher email click-through rate when it was sent with the name John instead of a generic company name?

Talk about personalization. Using a person’s name or address as the sender can generate click-through results that are 0.73% higher. It is likely to drive more conversions or clicks than an automated email. To confirm this, run an A/B test with emails sent from a person and see whether they get more clicks.

For instance, you can try different versions of the sender’s name, also called the “from” name.

So what is the most important thing to test in a subject line? Is a long subject line more effective than a shorter one? If not, how will you test the results?

Usually, longer subject lines are seen to receive a higher open rate. But depending on the intent and the time of year, a short subject line could suffice. The standard subject line is around 40-50 characters, and that’s the sweet spot for marketers.

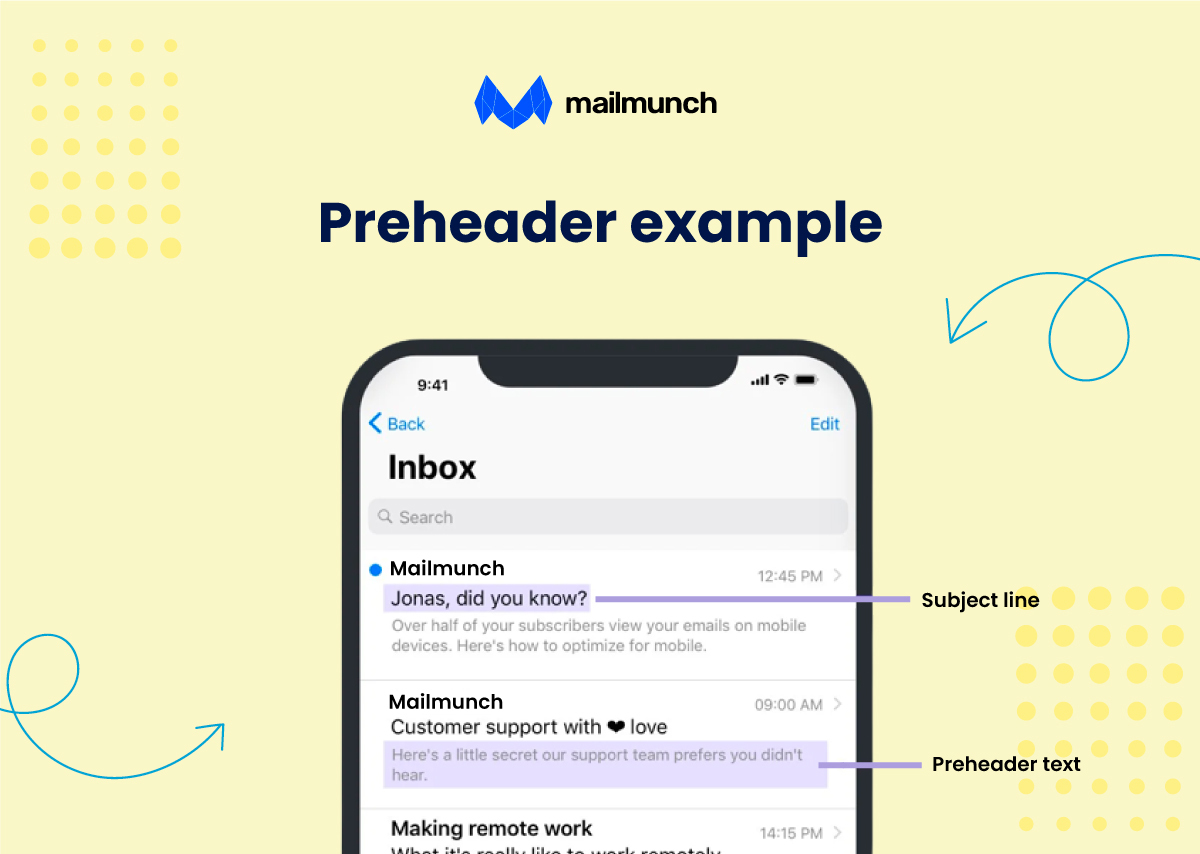

Unfortunately, the preheader, or message preview, is easy to overlook in email campaigns. Many users admit they are unaware of its importance. This little snippet of text is customizable, which works in favor of the email marketer.

But you must be careful when using preheaders. When an email is previewed in Gmail, the subject line appears first, followed by the message preview. On mobile, the subject line is listed with the message preview below it. Either way, you have limited characters to work with, so make sure they are optimized.

A/B testing preheaders can be cumbersome because it has to be done manually. But the effort is worth it.

Take the first line of the email like this - “Hello Jay, welcome to Mailmunch! You’re joining as a premium user….”

A summary - “Welcome to Mailmunch. I’m happy to show you the way to the email templates library.”

Offer CTA - “Here’s how you can start earning revenue with emails.”

Sometimes a simple piece of text can be refreshing. A simple plain text email can work the best instead of a flashy version.

The format of an email should match its intent. A promotional email without a graphic would seem incomplete, but an unsubscribe confirmation can work as plain text too. Use A/B tests to find the scenarios in which plain-text emails produce the desired results.

Hey reader!

Sounds anonymous, right? I’d have used it to greet you if I knew your name. But I don’t. Hence the reason I was cautious in personalizing the emails. Here’s the thing: not all subscribers provide their first or last name.

Using names is one way to test the emails, but there are other personalization means. For instance:

A: Start your free trial now

B: Start a free trial that converts

The use of the word convert resonates more with the user. It identifies the user's intent and gives them a reason to try the free trial.

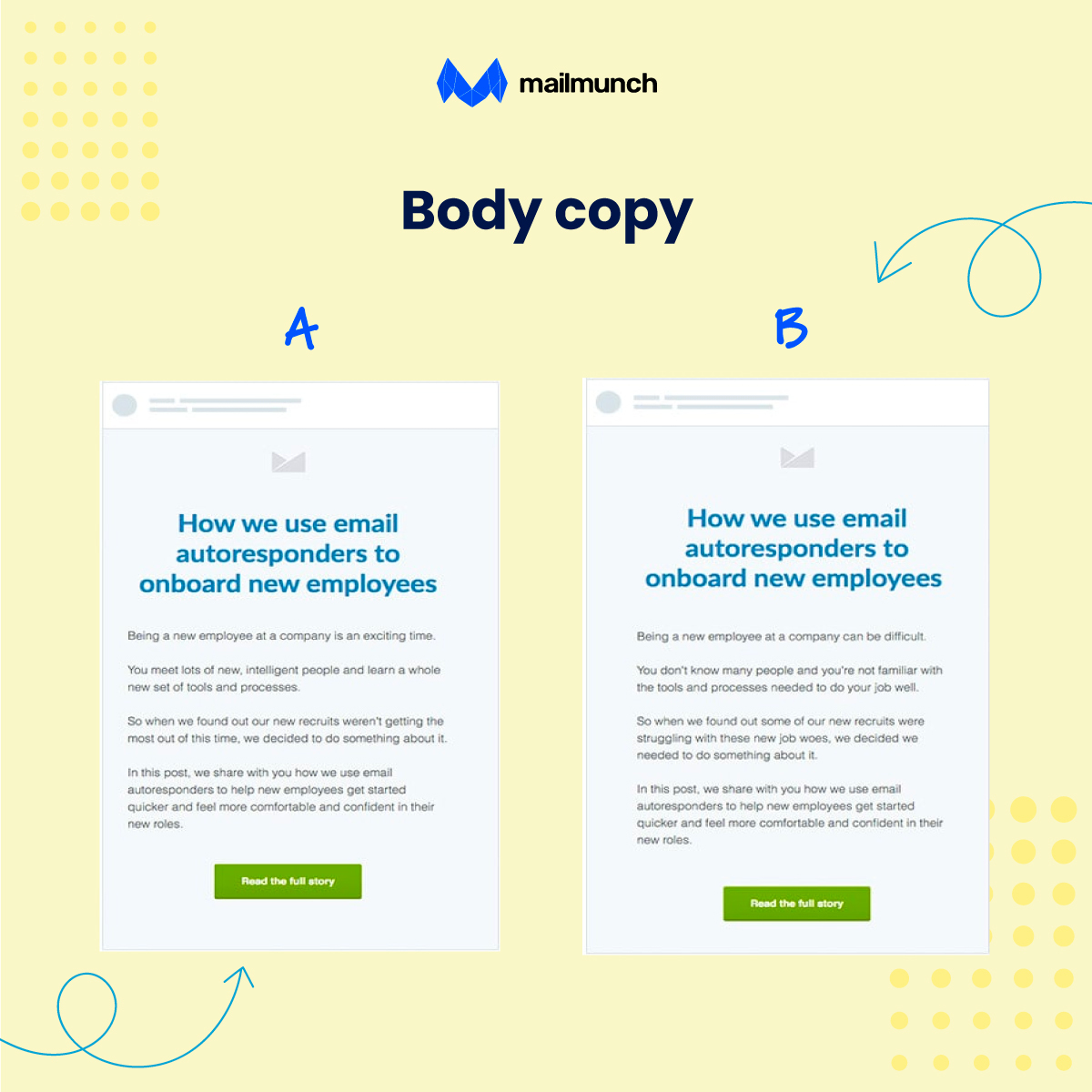

How often do you create sections and sub-sections in the email copy?

Dividing the content into smaller chunks is common practice. Beyond length and wording, it is worth A/B testing the structure of the email copy as well.

A new format could work…or maybe not. Instead of the standard copy format, a question-and-answer format can deliver results too.

Expected results: Doubled conversion rates!

Email marketers can increase the conversion rate with new copy, but it is effective only as long as your intent is clear in the email.

The length of the email is a story of its own. 3-4 seconds. That’s the ideal length of time for an email to grab the reader’s attention once it’s opened. So what could grab customers’ attention right away?

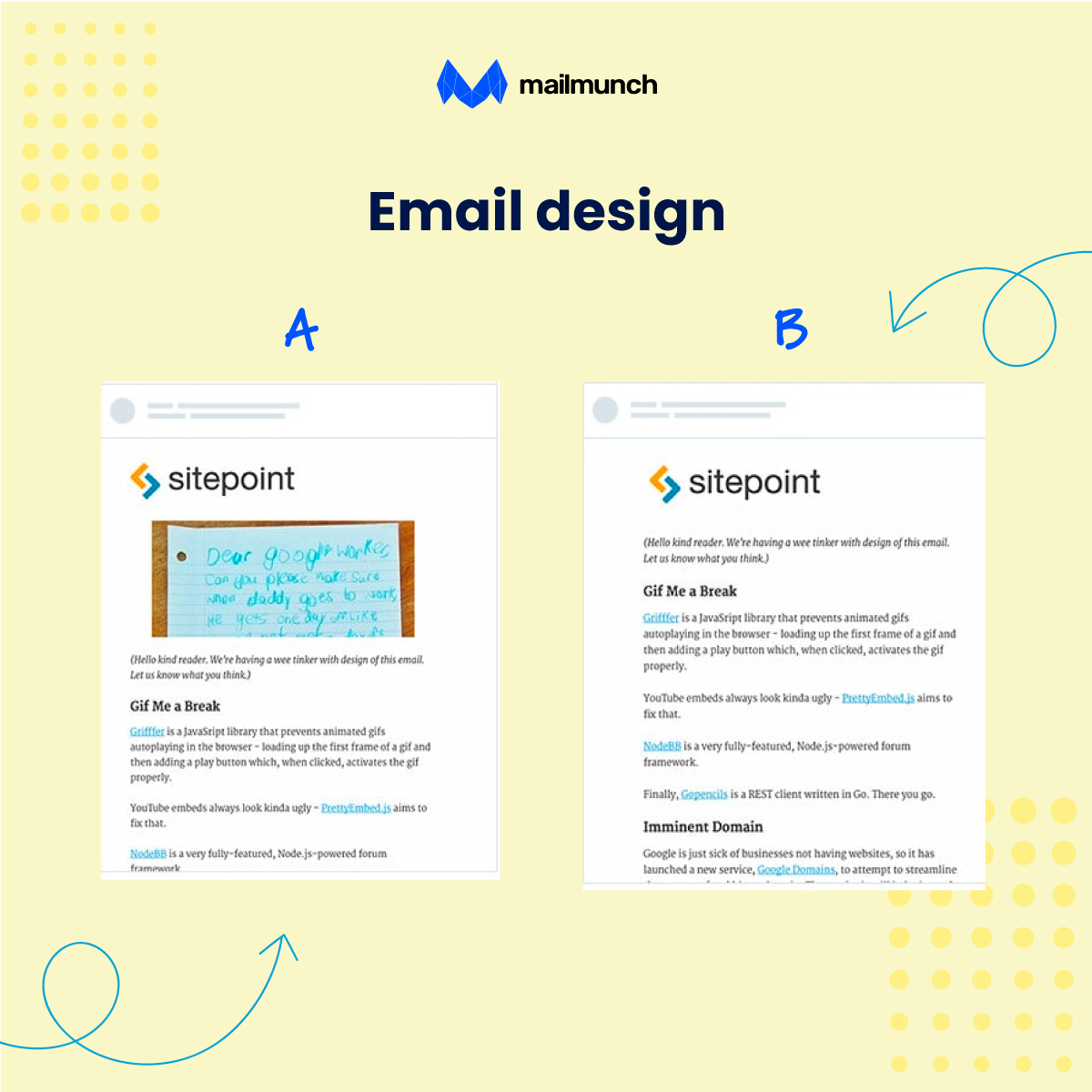

The email design plays a vital role in creating the desired appeal. Most of the emails are viewed on mobile phones. So the design should be mobile-friendly.

Test the email design to check its responsiveness. It could be the text size or the CTA button that needs optimization.

Your email design can have a big impact on your click-through rate. If you're unsure what looks best, try A/B testing different designs.

For instance, a change in the graphics can create a different appeal and set the tone for the email. After A/B testing the images, you can analyze the results for click-through rates. A/B tests let you evaluate even minute changes within the email. Sometimes a single image works, and sometimes two or more are required.

Now the placement of the images would vary according to the design, so continue testing to see which one of them is more effective.

If the customer's email client doesn't load images, add ALT text that will appear in place of the image.

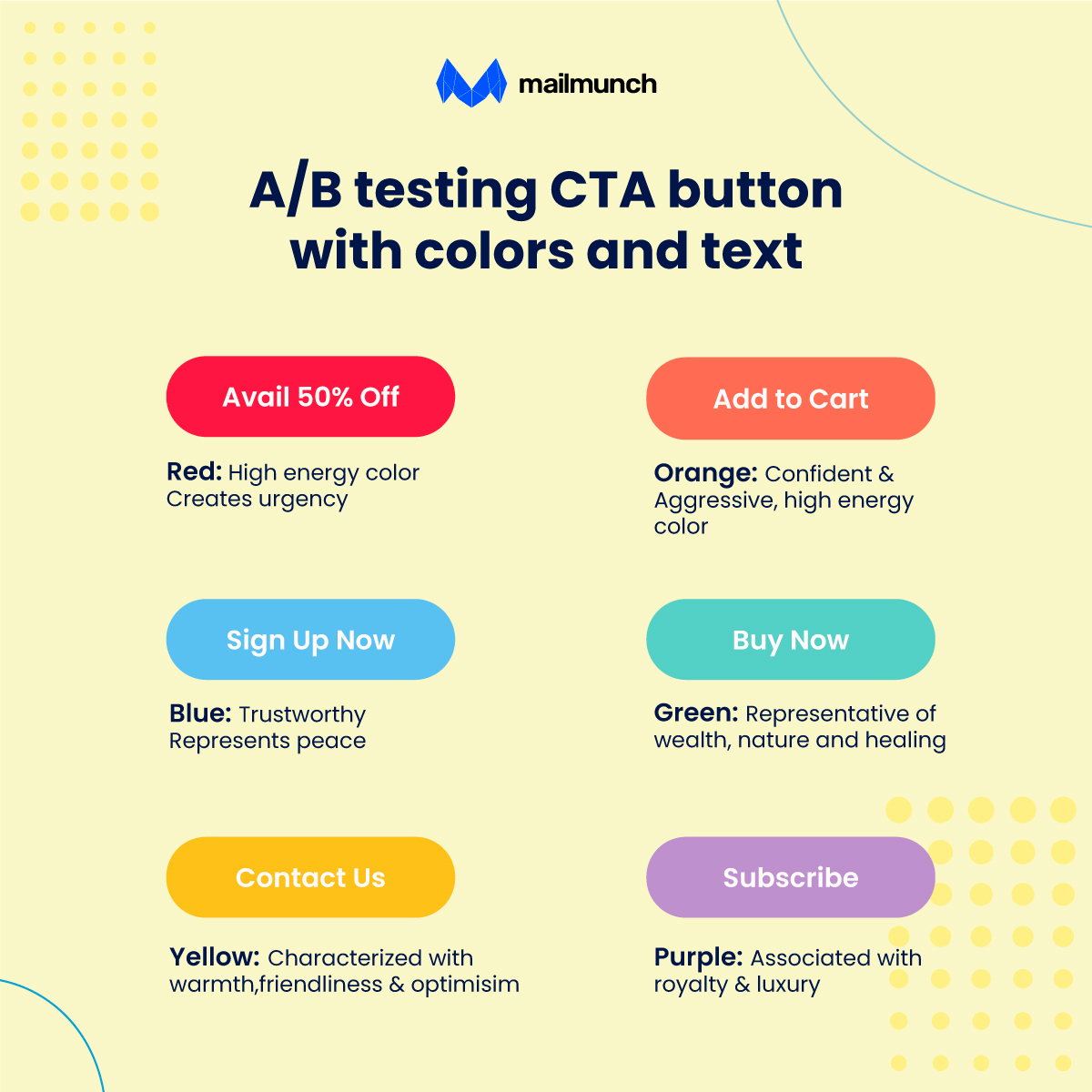

“Create my account now!” or “Try Mailmunch now!”

Which of these CTAs would you click? Or maybe the goal should be about “Use email templates library now!”.

There are different ways to write a CTA. For instance, it’s not only the text but also the position of the CTA that matters. Should it be at the top? At the bottom? Or in the middle of the email copy?

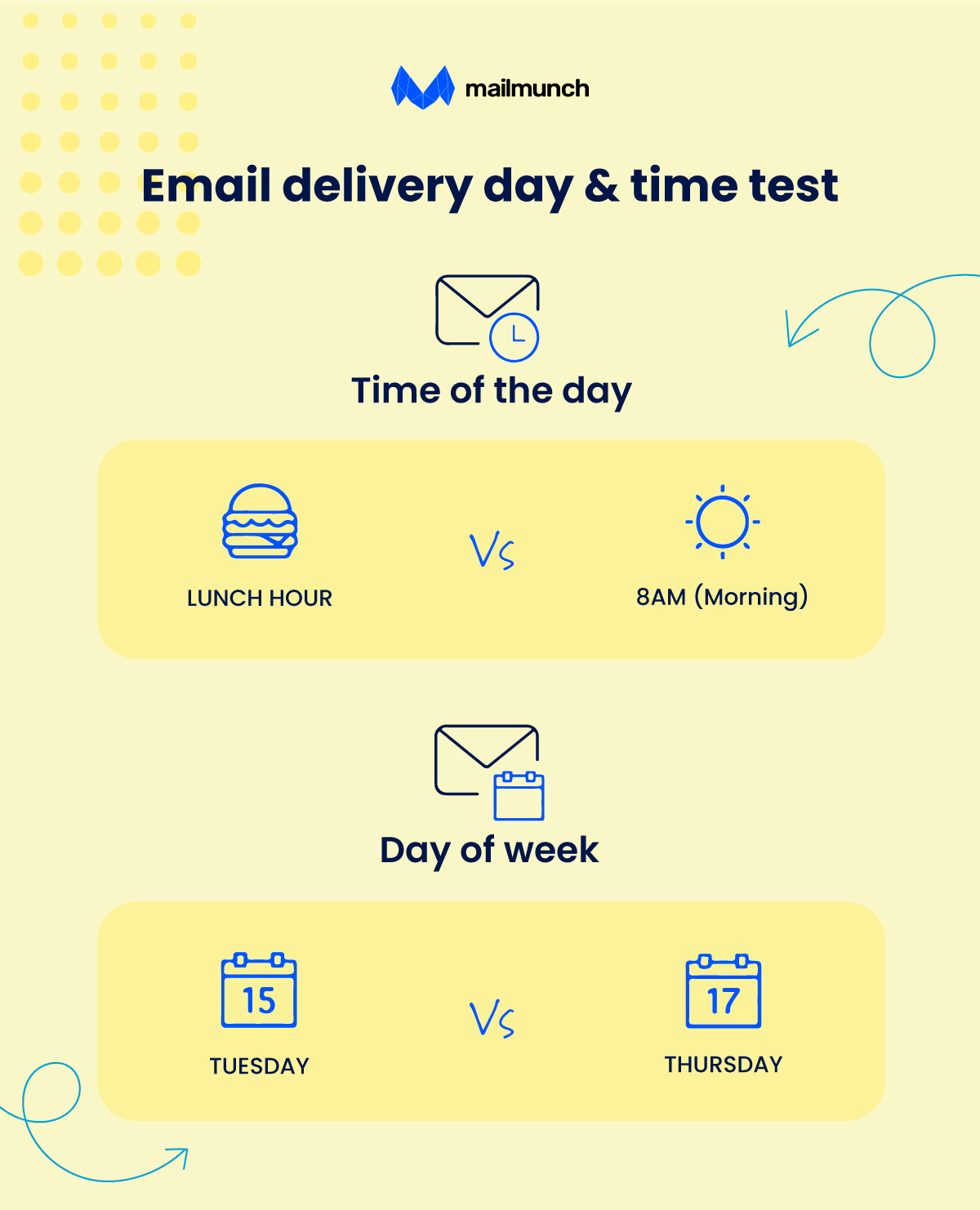

We have briefly discussed the best days and times to send emails in our previous blogs.

Email delivery day and time profoundly affect the open rates, click-through rates, and conversions. A customer may want to open a promotional email on a Sunday at 8 p.m. if the holiday season is around the corner.

A/B testing the time and the day of the week to send emails is crucial. Tuesdays and Thursdays are considered to be the best days to send emails. Early hours or lunch breaks are the best time to send emails. Of course, you need to test which hour of the day works best for your email campaign.

Days of the week and time of the day are two common variants you need to test before sending an email campaign.

A campaign sent from the company’s email address can sound more monotone. But an email sent from a user’s email address that consists of a title and their name sounds more professional and personal. It personalizes the user experience.

To find the best version, run an A/B test. Testing the email address variable also lets you discover whether your subscribers are attuned to the emails in their inbox. In most campaigns, the person responsible for the content is mentioned within the email copy, hence the name and signature in the footer.

This way, subscribers can recognize the emails quickly.

For every test you conduct, look out for the winning metrics (clicks, opens, and conversions). For each test, create a record that identifies the winning variants and test combinations. Calculate the percentage of tests whose outcomes can be used to predict results for similar emails in the near future.

Insights about your chosen metrics give you quantifiable results from a diverse user base. Based on this data, you can make improvements quickly.

Including too many details within an email can be overwhelming, but not knowing which elements work leaves you blindsided. With this blog, you now have a thorough understanding of how A/B testing works.

The more A/B testing you do, the better the results you can generate from future email campaigns. Start by testing small variants and gradually increase the pace. The only challenging part is that you may have never tried A/B testing before. So if you find something that works well in a few split tests, it’s all good.

A bookworm and a pet nerd at heart, Summra works as Content Writer at Mailmunch. She loves to play with keywords, titles, and multiple niches for B2B and B2C markets. With her 3 years of experience in creative writing and content strategy, she fancies creating compelling stories that your customers will love, igniting results for your business.
